A sweeping analysis of the Common Crawl dataset—a cornerstone of training data for large language models (LLMs) like DeepSeek—has uncovered 11,908 live API keys, passwords, and credentials embedded in publicly accessible web pages.

The leaked secrets, which authenticate successfully with services ranging from AWS to Slack and Mailchimp, highlight systemic risks in AI development pipelines as models inadvertently learn insecure coding practices from exposed data.

Researchers at Truffle Security traced the root cause to widespread credential hardcoding across 2.76 million web pages archived in the December 2024 Common Crawl snapshot, raising urgent questions about safeguards for AI-generated code.

The Common Crawl dataset, a 400-terabyte repository of web content scraped from 2.67 billion pages, serves as foundational training material for DeepSeek and other leading LLMs.

When Truffle Security scanned this corpus using its open-source TruffleHog tool, it discovered not only thousands of valid credentials but also troubling reuse patterns.

For instance, a single WalkScore API key appeared 57,029 times across 1,871 subdomains, while one webpage contained 17 unique Slack webhooks hardcoded into front-end JavaScript.

Mailchimp API keys dominated the leak, with 1,500 unique keys enabling potential phishing campaigns and data theft.

To process Common Crawl’s 90,000 WARC (Web ARChive) files, Truffle Security deployed a distributed system across 20 high-performance servers.

Each node downloaded 4 GB compressed files, split them into individual web records, and ran TruffleHog on those records to detect and verify live secrets.
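The per-record scanning step can be sketched roughly as follows. This is a minimal illustration, not Truffle Security's implementation: the `Record` and `Finding` types, the `scan_records` helper, and the three regular expressions are assumptions made for this example. WARC parsing, TruffleHog's own detectors, and live verification against each service are deliberately left out.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Iterator, List

# Illustrative candidate patterns only (assumptions, not TruffleHog's detectors).
CANDIDATE_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "mailchimp_api_key": re.compile(r"\b[0-9a-f]{32}-us[0-9]{1,2}\b"),
    "slack_webhook": re.compile(
        r"https://hooks\.slack\.com/services/T[A-Z0-9]+/B[A-Z0-9]+/[A-Za-z0-9]+"
    ),
}


@dataclass(frozen=True)
class Record:
    """One archived web page: hypothetical stand-in for a parsed WARC record."""
    url: str
    body: str


@dataclass(frozen=True)
class Finding:
    """A candidate secret; it is unverified until tested against the service."""
    url: str
    kind: str
    match: str


def scan_record(record: Record) -> Iterator[Finding]:
    """Yield every pattern match found in a single record body."""
    for kind, pattern in CANDIDATE_PATTERNS.items():
        for m in pattern.finditer(record.body):
            yield Finding(record.url, kind, m.group(0))


def scan_records(records: Iterable[Record]) -> List[Finding]:
    """Scan a batch of records, as one worker node might do per file."""
    findings: List[Finding] = []
    for record in records:
        findings.extend(scan_record(record))
    return findings


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
    page = Record("https://example.com/", 'var key = "AKIAIOSFODNN7EXAMPLE";')
    for finding in scan_records([page]):
        print(finding.kind, finding.url)
```

Pattern matching alone only produces candidates. The distinction the researchers draw is that a finding counts only after it authenticates successfully, which this sketch does not attempt.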

To quantify real-world risks, the team prioritized verified credentials—keys that actively authenticated with their respective services.

Notably, 63% of secrets were reused across multiple sites, amplifying breach potential.
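One plausible way to compute a reuse figure of this kind is to group sightings by secret and count how many secrets appear on more than one host. The sketch below assumes that definition; the article does not spell out how the 63% was measured, and the `reuse_fraction` name and the `(secret_id, host)` input shape are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def reuse_fraction(sightings: Iterable[Tuple[str, str]]) -> float:
    """Return the share of distinct secrets seen on more than one host.

    `sightings` yields (secret_id, host) pairs. Both names are assumptions
    for this sketch, not fields from the original study.
    """
    hosts_by_secret: Dict[str, Set[str]] = defaultdict(set)
    for secret_id, host in sightings:
        hosts_by_secret[secret_id].add(host)
    if not hosts_by_secret:
        return 0.0
    reused = sum(1 for hosts in hosts_by_secret.values() if len(hosts) > 1)
    return reused / len(hosts_by_secret)


if __name__ == "__main__":
    sample = [
        ("key-1", "a.example"),
        ("key-1", "b.example"),  # key-1 is reused across two hosts
        ("key-2", "a.example"),
        ("key-2", "a.example"),  # repeated on the same host: not reuse
    ]
    print(reuse_fraction(sample))
```

Storing hosts in a set means repeated appearances on the same site do not inflate the count. That matters for cases like the WalkScore key, which appeared tens of thousands of times.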

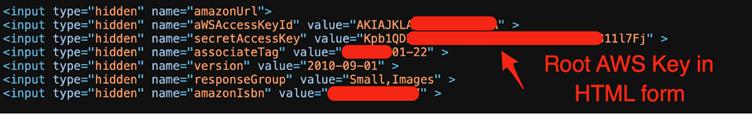

The scan surfaced startling cases, such as an AWS root key embedded in front-end HTML for S3 Basic Authentication, a practice with no functional benefit but grave security implications.

Researchers also identified software firms recycling API keys across client sites, inadvertently exposing customer lists.

While Common Crawl’s data reflects broader internet security failures, integrating these examples into LLM training sets creates a feedback loop.

Models cannot distinguish between live keys and placeholder examples during training, normalizing insecure patterns like credential hardcoding.

This issue gained attention last month when researchers observed LLMs repeatedly instructing developers to embed secrets directly into code—a practice traceable to flawed training examples.

The Verification Gap in AI-Generated Code

Truffle Security’s findings underscore a critical blind spot: even if 99% of detected secrets were invalid, their sheer volume in training data would still skew LLM outputs toward insecure recommendations.

For instance, a model exposed to thousands of front-end Mailchimp API keys may prioritize convenience over security, ignoring backend environment variables.
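For contrast, the backend pattern referred to here looks roughly like the sketch below. It is an illustration under stated assumptions: the variable name `MAILCHIMP_API_KEY`, the `load_api_key` helper, and the `MissingSecretError` class are all hypothetical and are not taken from Mailchimp's documentation or from the research.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_api_key(name: str = "MAILCHIMP_API_KEY") -> str:
    """Read a secret from the server-side environment instead of source code.

    The default variable name is an assumption chosen for this example.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        # Fail loudly rather than fall back to a hardcoded default.
        raise MissingSecretError(f"environment variable {name} is not set")
    return value
```

Because the key lives only in the server's environment, it never ships in HTML or JavaScript sent to the browser, and so it cannot end up in a web crawl.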

This problem persists across all major LLM training datasets derived from public code repositories and web content.

In response, Truffle Security advocates for multilayered safeguards. Developers using AI coding assistants can implement Copilot Instructions or Cursor Rules to inject security guardrails into LLM prompts.

For example, a rule specifying “Never suggest hardcoded credentials” steers models toward secure alternatives.

On an industry level, researchers propose techniques like Constitutional AI to embed ethical constraints directly into model behavior, reducing harmful outputs.

However, this requires collaboration between AI developers and cybersecurity experts to audit training data and implement robust redaction pipelines.
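A redaction pass of the kind mentioned here might, in its simplest form, replace anything that looks like a credential before text enters a training corpus. The sketch below is an assumption-laden illustration: the patterns, the `redact` function, and the `[REDACTED_SECRET]` placeholder are invented for this example. A production pipeline would need far broader detection than two regular expressions.

```python
import re

# Illustrative patterns only; real detectors cover many more credential types.
SECRET_PATTERNS = [
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),            # AWS access key ID shape
    re.compile(r"\b[0-9a-f]{32}-us[0-9]{1,2}\b"),   # Mailchimp API key shape
]


def redact(text: str, placeholder: str = "[REDACTED_SECRET]") -> str:
    """Replace every pattern match in `text` with a fixed placeholder."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
    print(redact('aws_key = "AKIAIOSFODNN7EXAMPLE"'))
```

Redaction removes live keys but leaves the surrounding hardcoding pattern in place, so it addresses credential exposure more directly than it addresses the insecure coding habit itself.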

This incident underscores the need for proactive measures.

As LLMs like DeepSeek become integral to software development, securing their training ecosystems isn’t optional—it’s existential.

The nearly 12,000 leaked keys are merely a symptom of a deeper ailment: our collective failure to sanitize the data shaping tomorrow’s AI.
