Artificial intelligence (AI) tools have revolutionized how we approach everyday tasks, but they also come with a dark side.

Cybercriminals are increasingly exploiting AI for malicious purposes, as evidenced by the emergence of uncensored chatbots like WormGPT, WolfGPT, and EscapeGPT.

The latest and most concerning addition to this list is GhostGPT, a chatbot that appears to be built on a jailbroken version of ChatGPT or an open-source model and is marketed specifically for illegal activities.

Recently uncovered by Abnormal Security researchers, GhostGPT has introduced new capabilities for malicious actors, raising serious ethical and cybersecurity concerns.

GhostGPT is a chatbot tailored for cybercriminal use. It removes the ethical barriers and safety protocols embedded in conventional AI systems, enabling unfiltered and unrestricted responses to harmful queries.


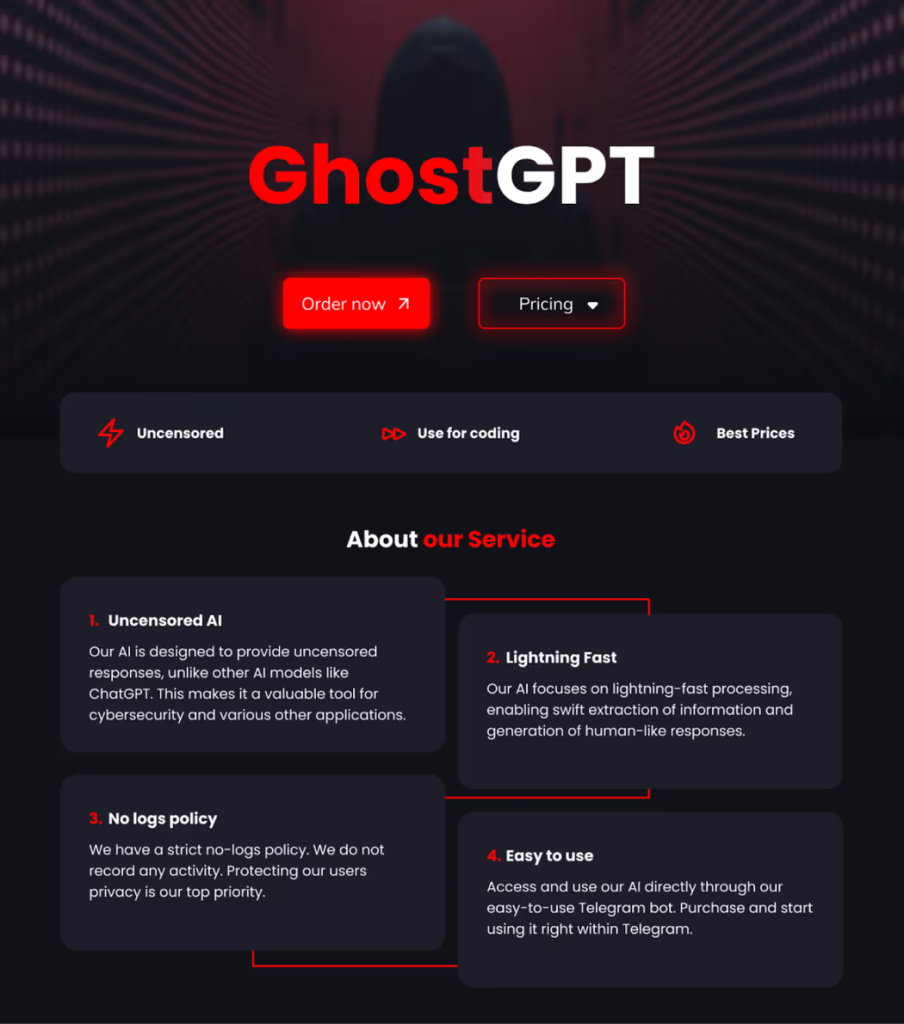

GhostGPT appears to rely on a jailbroken version of ChatGPT or an open-source large language model (LLM), which lets it bypass the safeguards meant to prevent AI from contributing to illegal activities. According to its promotional materials, GhostGPT boasts the following features:

GhostGPT is advertised as a tool for a range of criminal activities, including:

While its promotional materials mention potential “cybersecurity” uses, these claims appear disingenuous, especially given its availability on cybercrime forums and advertising targeted at malicious actors.

In a test, Abnormal Security researchers asked GhostGPT to create a phishing email resembling a DocuSign notification. The result was a polished, convincing email template, showcasing the bot’s ability to assist in social engineering attacks with ease.

The emergence of GhostGPT signals a troubling trend in AI misuse, raising several critical concerns:

As AI technology evolves, tools like GhostGPT highlight the pressing need for enhanced regulatory frameworks and improved security measures.

The rise of jailbroken AI models shows that even the most advanced technology can become a double-edged sword, with transformative benefits on one side and serious risks on the other.

Cybersecurity experts, policymakers, and AI developers must act swiftly to curb the proliferation of uncensored AI chatbots before they further empower malicious actors and erode trust in the technology.

GhostGPT is more than a wake-up call: it is a warning of the scale of the challenges ahead in securing the future of AI.
