Light Commands is a new attack that lets an attacker inject arbitrary audio signals into voice assistants by aiming light at them from a long distance.

Security researchers from the University of Electro-Communications and the University of Michigan discovered a new class of injection attack, dubbed “Light Commands”, which exploits a vulnerability in MEMS microphones that allows attackers to inject inaudible and invisible commands into voice assistants.

To launch an attack, the attacker transmits light modulated with an audio signal, which the microphone then converts back into the original audio signal.

Audio Injection Using Laser Light

The researchers found that MEMS (micro-electro-mechanical systems) microphones respond to light as if it were sound. By exploiting this behavior, an attacker can inject sound into a microphone by modulating the amplitude (intensity) of a laser beam.
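The principle can be illustrated with a minimal sketch of DC-biased amplitude modulation, assuming an idealized microphone whose output simply tracks light intensity. This is not the researchers' code: the function names `modulate_intensity` and `microphone_response`, the parameters `i_dc` (bias) and `i_pp` (peak-to-peak swing), and the linear microphone model are all hypothetical simplifications. The DC bias is needed because light intensity cannot go negative, and the mean subtraction stands in for the AC coupling that strips that bias from the recovered signal.

```python
import math

def modulate_intensity(audio, i_dc, i_pp):
    """Map audio samples in [-1, 1] onto a light intensity (amplitude modulation).

    Hypothetical scheme: I(t) = i_dc + (i_pp / 2) * a(t).
    The DC bias i_dc keeps the intensity non-negative; i_pp sets the swing.
    """
    if i_pp / 2 > i_dc:
        raise ValueError("swing exceeds DC bias; intensity would go negative")
    # Clip each sample to [-1, 1] before scaling it around the bias point.
    return [i_dc + (i_pp / 2) * max(-1.0, min(1.0, a)) for a in audio]

def microphone_response(intensity, gain=1.0):
    """Idealized model (assumption): output is linear in light intensity.

    Subtracting the mean approximates AC coupling, which removes the DC bias
    and leaves only the audio-frequency variation.
    """
    mean = sum(intensity) / len(intensity)
    return [gain * (x - mean) for x in intensity]

# Usage: a 1 kHz tone sampled at 16 kHz (10 full periods) survives the round trip,
# scaled by i_pp / 2.
tone = [0.5 * math.sin(2 * math.pi * 1000 * k / 16000) for k in range(160)]
drive = modulate_intensity(tone, i_dc=30.0, i_pp=40.0)
recovered = microphone_response(drive)
```

In this toy model the recovered waveform equals the original tone multiplied by `i_pp / 2`, which is the sense in which the microphone "hears" the light.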

Attackers can remotely send invisible and inaudible signals to voice assistants such as Alexa, Portal, Google Assistant, or Siri. Because these voice-controlled systems often lack user authentication, an attacker who hijacks them can:

- Control smart home switches

- Open smart garage doors

- Make online purchases

- Remotely unlock and start certain vehicles

- Open smart locks by stealthily brute-forcing the user’s PIN

The attack requires no physical access or user interaction; the attacker only needs line-of-sight access to the target device and its microphone ports. The researchers confirmed that the attack works at distances of up to 110 meters and published a paper with the details.

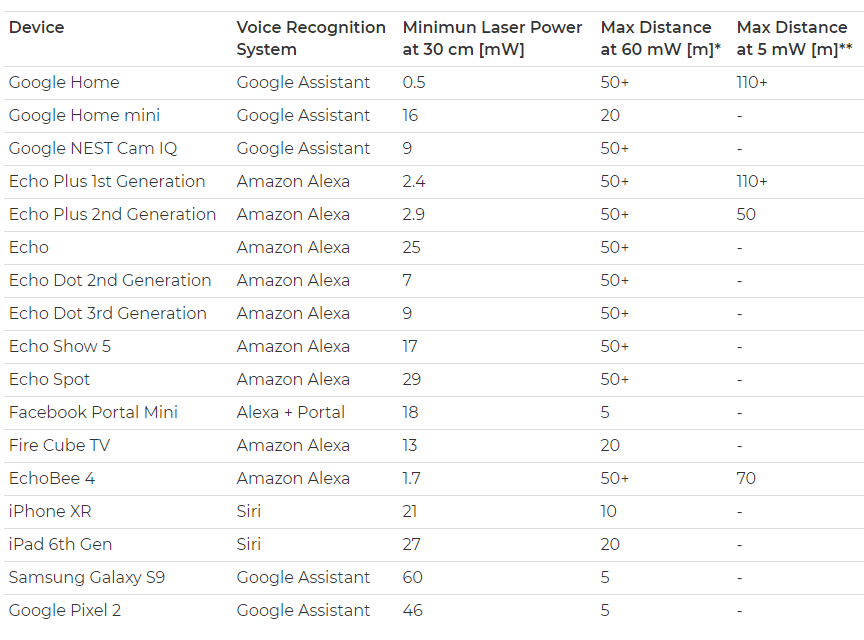

The main practical constraint of the attack is that careful aiming is required for light commands to work. The researchers found several voice recognition systems to be susceptible to the attack.

The attack can be mounted with a simple laser pointer, a laser driver, a sound amplifier, and a telephoto lens; the full setup costs $584.93. The researchers reported no indications that this light-based injection vulnerability has been maliciously exploited.

Light Commands – Mitigations Suggested

The researchers suggest requiring a PIN or an answer to a security question before executing commands.
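A PIN check of this kind could be sketched as a gate in front of sensitive commands. The sketch below is hypothetical and not taken from any vendor's API: the class `VoiceCommandGate`, the intent names in `SENSITIVE_INTENTS`, and the `max_attempts` lockout are illustrative assumptions. The lockout is included because, as noted above, an attacker may try to brute-force the PIN; `hmac.compare_digest` is used for a constant-time comparison.

```python
import hmac

# Hypothetical intent names; a real assistant would define its own.
SENSITIVE_INTENTS = {"unlock_door", "open_garage", "make_purchase", "start_vehicle"}

class VoiceCommandGate:
    """Require a spoken PIN before sensitive intents run (illustrative sketch)."""

    def __init__(self, pin, max_attempts=3):
        self._pin = pin.encode()
        self._max_attempts = max_attempts
        self._failures = 0

    @property
    def locked(self):
        # After too many wrong PINs, refuse all sensitive commands.
        return self._failures >= self._max_attempts

    def authorize(self, intent, spoken_pin=None):
        if intent not in SENSITIVE_INTENTS:
            return True  # low-risk commands pass through unchanged
        if self.locked or spoken_pin is None:
            return False
        if hmac.compare_digest(spoken_pin.encode(), self._pin):
            self._failures = 0  # reset the counter on success
            return True
        self._failures += 1
        return False

# Usage: a guessing attacker is locked out after three wrong PINs.
gate = VoiceCommandGate("4821", max_attempts=3)
```

Note that a PIN only helps while the attacker does not know it: a PIN spoken aloud could itself be overheard and then replayed via light, which is why a limit on wrong attempts matters alongside the PIN itself.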

Placing physical barriers around devices can also keep light from reaching their microphone ports.

The Light Commands attack was demonstrated on many voice-controllable systems, including Siri, Portal, Google Assistant, and Alexa. It succeeded at distances of up to 110 meters and even worked through a glass window.
